"Friends, Romans, countrymen, lend me your ears"

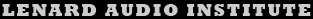

The outer ear extends as far as the ear-drum, a pressure-sensitive membrane. Beyond this point is the middle ear, in which three tiny bones transmit and amplify the vibrations of the ear-drum. Beyond the middle ear is the inner ear, which is filled with liquid and contains the most intricate structures of all: the spiral-shaped cochlea, where sound is converted to nerve impulses, and the semicircular canals, the organs for our sense of balance.

The outer ear flap (the 'pinna') surrounds each hole in the side of the skull and leads into the ear canal. This irregular cylinder, averaging 6mm in diameter and approx 25mm long, narrows slightly, then widens towards its inner end, which is sealed off by the ear-drum. This shape, together with the combination of open and closed ends, makes the canal act like an organ-pipe-shaped tube enclosing a resonating column of air. The ear canal supports sound vibrations and resonates at the frequencies which human ears hear most sharply. This resonance amplifies the variations of air pressure that make up sound waves, placing a peak of pressure directly at the ear-drum. At frequencies between 2k - 5k Hz, the pressure at the ear-drum is approximately double the pressure at the open end of the canal.
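As a rough check on the organ-pipe comparison above, the canal can be treated as an idealised uniform tube, open at one end and closed at the other, whose lowest resonance is a quarter wavelength long. This is only a sketch: the uniform-tube model, the function name and the speed of sound of 344 m/s (the value implied by the "1m / 344Hz" figure later on this page) are assumptions, and a real ear canal is neither uniform nor rigid.

```python
# Assumed speed of sound in air, in metres per second (not stated for the ear canal itself).
SPEED_OF_SOUND_M_S = 344.0


def quarter_wave_resonance_hz(length_m, speed_m_s=SPEED_OF_SOUND_M_S):
    """Lowest resonance of an ideal tube open at one end and closed at the other.

    The tube holds a quarter of a wavelength, so f = c / (4 * L).
    """
    return speed_m_s / (4.0 * length_m)


# Ear canal length from the text: approx 25 mm.
canal_resonance_hz = quarter_wave_resonance_hz(0.025)

if __name__ == "__main__":
    print(f"Idealised ear-canal resonance: {canal_resonance_hz:.0f} Hz")
```

With a 25mm tube the result is about 3.4k Hz, which sits inside the 2k - 5k Hz band quoted above.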

The buzzing of a mosquito has a power of less than one quadrillionth of a watt. A pressure movement smaller than the diameter of a hydrogen molecule causes the ear-drum to vibrate and can be heard. A sound a million million (1,000,000,000,000) times larger, if of short duration, will not damage the hearing mechanism. We can discriminate approximately 400,000 sounds (magnitudes greater in bandwidth than our eyes) and recognise a voice blurred by a telephone. Hearing extends over a frequency spectrum of 3 decades (10 octaves), whereas sight is limited to less than one octave of the electromagnetic spectrum.
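The "3 decades (10 octaves)" figure can be checked with two logarithms. The sketch below assumes the commonly quoted hearing limits of 20 Hz and 20k Hz, which this page does not state; the function names are illustrative.

```python
import math


def decades(f_low_hz, f_high_hz):
    """Number of factor-of-ten steps between two frequencies."""
    return math.log10(f_high_hz / f_low_hz)


def octaves(f_low_hz, f_high_hz):
    """Number of doublings between two frequencies."""
    return math.log2(f_high_hz / f_low_hz)


# Assumed limits of human hearing (not given in the text).
HEARING_LOW_HZ = 20.0
HEARING_HIGH_HZ = 20_000.0

if __name__ == "__main__":
    print(f"decades: {decades(HEARING_LOW_HZ, HEARING_HIGH_HZ):.2f}")
    print(f"octaves: {octaves(HEARING_LOW_HZ, HEARING_HIGH_HZ):.2f}")
```

Under these assumed limits the span is 3 decades and just under 10 octaves.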

Soft-spoken Indigenous people live in remote areas, isolated from traffic and amplified sound, where the background sound level, apart from birds and periodic thunder, is about one-tenth that of a refrigerator. They can hear a soft murmur across a clearing the size of a football field, and locate its source. They suffer little to no loss of acuity with age.

Hearing test

Of all our links to the outside world, hearing is the essential sense that makes us human. Without hearing we cannot master language, except with heroic effort. Hearing with speech gives us the capacity to communicate, to use and pass on knowledge, and to badly rule a planet. Hearing is the only sense that remains active every moment of our lives and cannot be negated when asleep or under anaesthetic. But in our consumerist, image-driven world, when asked to select the most precious of the five senses, few people would name hearing. Paraphrased from S.S.Stevens (1906-1973).

Brain hemispheres

Our brain is divided into 2 hemispheres which function in parallel. The hemispheres work together for almost every task. The specialties that each side possesses make it easier for both sides to work together. For most right-handed people, the right sensory system attends to detail whereas the left sensory system generalises context (at the same time). The hemispheres can be reversed for sensory perception at distances beyond arm's length. This sometimes creates interesting illusions or confusion. Compare reading a book within arm's length with reading a road sign at long distance.

This difference between the hemispheres is exaggerated when driving a vehicle with one eye closed, then the other. One eye provides information to the brain hemisphere that discerns points of detail, whereas the other eye provides information to the brain hemisphere that processes general information on the direction of road and traffic. Also, many people tend to drive faster in a fog white-out but slower in a black-out. Model plane enthusiasts get confused when their plane hits the ground, and say "I couldn't see the ground coming up". Lunar pictures of craters and hills appear to reverse at random, similar to Escher drawings.

Try to understand what someone is saying in a crowded noisy room by turning or closing one ear, then the other. Try changing a telephone from one ear to the other, then compare the difference to speaking with earphones over both ears. The experience of communication changes. Melody, voice and higher tones may be referenced to the brain hemisphere of the right, whereas rhythm, backing and lower tones may be referenced to the left. This can be used to advantage by panning a voice toward the right to give an association of closeness or separateness, and panning rhythm and backing toward the left.

Synesthesia

Creating sound for cinema requires understanding synesthesia (one sensory system affecting another). Warm colours are associated with the foreground, such as skin tones, red blood or pale yellow, together with deeper sounds of breathing, heartbeat and bass rumble. Cold colours are associated with the background, such as white snow, blue sky and green hills, together with higher auditory tones of wind noise, chirping birds or screeching vehicle chases. Sounds can be biased with spatial movement, which is then reversed for added effect.

The above information on perception is generalised and subjective. Colour does not exist in nature, but as a pigment of our imagination. Music and language cannot be described by their atomic structure. Evolving over millions of years, this beautiful subjective function of the cross-integration of the sensory nervous system reflects our perception of reality, resulting in music, language and consciousness of existence. The gift that makes us human. But the gift that makes us human also gives us arrogance: to create Gods in our own image, and to justify greed, betrayal and the delusional belief in magical cables, audiophile brand names, and over-compressing recorded music.

Auditory perception

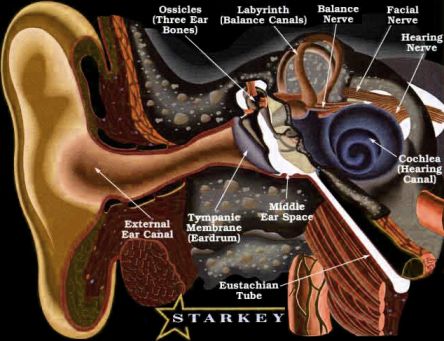

The above graph shows that at low level our hearing is most sensitive at frequencies between approx 1k - 4k Hz, where it is 1,000,000 times (60dB) more sensitive than at bass frequencies, e.g. 40Hz. Phons represent our hearing experience at different sound levels, described as equal loudness curves. At high sound levels we tend to experience all frequencies at a similar level. The sensitivity of our hearing reduces with increased level and so does the apparent frequency response. Muscular compression for the protection of the inner ear begins at approx 84dB SPL.
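The "1,000,000 (60dB)" figure above follows from the definition of the decibel if the ratio is read as a ratio of sound power (intensity). A minimal sketch of the conversion, with illustrative function names that are not from the original page:

```python
import math


def power_ratio_to_db(ratio):
    """Decibels for a ratio of powers or intensities: 10 * log10(ratio)."""
    return 10.0 * math.log10(ratio)


def pressure_ratio_to_db(ratio):
    """Decibels for a ratio of sound pressures: 20 * log10(ratio)."""
    return 20.0 * math.log10(ratio)


def db_to_power_ratio(db):
    """Inverse of power_ratio_to_db."""
    return 10.0 ** (db / 10.0)


if __name__ == "__main__":
    print(power_ratio_to_db(1_000_000))   # sensitivity difference quoted in the text
    print(pressure_ratio_to_db(1_000))    # the same difference expressed as a pressure ratio
```

A power ratio of 1,000,000 and a pressure ratio of 1,000 both correspond to 60dB, because power is proportional to pressure squared.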

Sound direction

The pics below show that at high frequencies the direction of sound is detected simply by the amplitude (loudness) difference between our ears.

At low frequencies the wavelengths are so long that both ears hear sound at a similar level. Therefore below 1k Hz the direction of sound is detected by the phase difference in the wave between our ears. We are able to detect sound direction down to approx 100Hz in a free field. But in a reverberant cave or room this is limited to approx 300Hz because the bass energy is reflected from all directions.

There is a small error area between 1k Hz and 4k Hz where it is difficult to detect the direction of sound. Locating a chirping cricket can be difficult if not impossible. Most animals can swivel their outer ears to locate the source of a sound, but the few humans who can do this use the skill to amuse children. Why is it not possible to detect sound direction at the most sensitive frequencies of hearing?

One explanation is that frequencies between 1k - 4k Hz are required for speech intelligibility, for which both brain hemispheres are required. Nearly all single natural sound sources are broad spectrum, which allows each brain hemisphere to process comparative differences of frequencies either side of 1k - 4k Hz, to achieve sound location. This may have evolved to help us detect hungry predators, and at the same time warn others. However, after the first moment of direct sound, localisation processing is ignored for approx 35ms. This may have evolved so that we are not confused by echoes from cave walls and ceilings within 10m / 30ft.

Transients allow us to immediately localise the source of a sound. A hungry carnivore stepping on twigs creates small sharp transient clicks, which can be simulated with clicks in headphones. Two clicks heard at the same time and level in both ears appear as a single fused centre click. As one click begins to lead the other, from approx 0.3ms, the fused click appears to shift toward the position of the leading click. From 0.65ms the fused click appears to come directly from the position of the leading click only. From 3ms (1m / 344Hz) the illusion of fusion ceases and the 2 separate clicks are heard.

- 0 - 0.3ms (100mm 3k4Hz) single sound centred between clicks.

- 0.3 - 0.65ms (224mm 1k5Hz) single sound shift toward leading click.

- 0.65ms - 3ms (1000mm 344Hz) single sound heard from leading click only.

- From 3ms sound is heard as 2 separate clicks.
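The distances in the list above are what sound travels in each delay time. The sketch below redoes that arithmetic and encodes the four listening regimes as a simple lookup. It assumes a speed of sound of 344 m/s (implied by the "1m / 344Hz" figure), treats the approximate thresholds as sharp boundaries, and uses illustrative function names. The frequency returned is the one whose period equals the delay, so it differs slightly from the rounded values in the list.

```python
SPEED_OF_SOUND_M_S = 344.0  # assumed; implied by "1m / 344Hz" in the text


def delay_to_path_mm(delay_ms):
    """Distance sound travels during delay_ms, in millimetres (m/s * ms = mm)."""
    return SPEED_OF_SOUND_M_S * delay_ms


def delay_to_frequency_hz(delay_ms):
    """Frequency whose period equals delay_ms."""
    return 1000.0 / delay_ms


def perceived_image(delay_ms):
    """Listening regime from the list above, using its thresholds as hard limits."""
    d = abs(delay_ms)
    if d < 0.3:
        return "single sound centred between clicks"
    if d < 0.65:
        return "single sound shifted toward leading click"
    if d < 3.0:
        return "single sound heard from leading click only"
    return "two separate clicks"


if __name__ == "__main__":
    for ms in (0.3, 0.65, 3.0, 35.0):
        print(f"{ms:>5} ms  {delay_to_path_mm(ms):8.1f} mm  "
              f"{delay_to_frequency_hz(ms):7.1f} Hz  {perceived_image(ms)}")
```

The same conversion applied to the 35ms window mentioned earlier gives a path of about 12m, which is the same order as the 10m / 30ft cave dimension quoted there.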

The above pic shows the perception of left - right shift that occurs when the same sound, such as a click, is played through both speakers. The listener, centred between the speakers, hears a single click as if from the centre. As the click from one speaker is advanced in time, the illusion is that the click which was heard from the centre now moves to the side of the speaker that is producing the advanced click. However, as the click is further advanced, the illusion that the click is coming from the advanced side stops, and we then hear separate clicks from each speaker. This test is most noticeable with headphones but limited with speakers, which are environment and position dependent.

In a classroom this demonstration opens up discussion of its possible use to obtain a left - right bias of an instrument in a stereo recording by delaying sound to one side. This appears to be most effective with instruments with strong transients. Each student needs to practise this process with headphones and speakers to understand the limitations. Notice how this effect is reduced or lost when excessive compression/limiting is applied to the instrument. Once this is understood, students can decide how to use it in creative applications.
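For practising the click test with headphones, a test file can be generated with the Python standard library alone. This is a hedged sketch and not the author's own test material: the 48k Hz sample rate, the click length, the function names and the output file name are all assumptions chosen for illustration.

```python
import struct
import wave

SAMPLE_RATE = 48_000  # Hz, assumed


def delayed_click_frames(delay_ms, lead="left", total_ms=50.0,
                         click_samples=8, amplitude=0.8):
    """Return (left, right) 16-bit sample pairs with one click per channel.

    The click in the lagging channel starts delay_ms after the leading one.
    """
    n_total = int(SAMPLE_RATE * total_ms / 1000)
    delay = round(SAMPLE_RATE * delay_ms / 1000)
    start = n_total // 4                      # leave some silence before the click
    peak = int(amplitude * 32767)
    left = [0] * n_total
    right = [0] * n_total
    lead_ch, lag_ch = (left, right) if lead == "left" else (right, left)
    for i in range(click_samples):
        lead_ch[start + i] = peak
        lag_ch[start + delay + i] = peak
    return list(zip(left, right))


def write_stereo_wav(path, frames):
    """Write (left, right) sample pairs as a 16-bit stereo WAV file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(2)
        wav.setsampwidth(2)                   # 2 bytes = 16 bits per sample
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(b"".join(struct.pack("<hh", l, r) for l, r in frames))


if __name__ == "__main__":
    # Left click leads by 0.5 ms; the file name is hypothetical.
    write_stereo_wav("click_delay_0p5ms.wav", delayed_click_frames(0.5))
```

Generating a series of files with delays from 0 to about 5ms, for both `lead="left"` and `lead="right"`, lets students hear where the image shifts and where fusion breaks down. At 48k Hz the delay can only be set in steps of about 0.02ms, which is fine for this purpose.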

Localisation

Most research on sound localisation is done with small passive speakers as in the above pic. This research is mostly applied to understanding left-centre-right positioning from different mic techniques. The ultimate quest is to create a 5.1 cinema surround illusion (with clever software) from only two small front speakers that give the illusion of sound coming from directions other than the speaker placement.

Time shifts and phase effects between speakers do create interesting and novel illusory effects, but at the cost of reducing fidelity and requiring the listener to be at a precise centred position. The contradiction in this research is that the majority of pop music and B grade cinema sound is recorded with excessive compression, which removes the transients and other detail that allow for direction of hearing.

Diana Deutsch - Audio Illusions

Youtube - 42 Audio Illusions & Phenomena!

Reciprocity of recording and playback chains

There are many web sites, books and periodicals on the application and technicalities of microphones. This page is not intended to repeat that information but to provide a basic understanding of how creative results can be achieved with microphones in the amplifying and recording process. All who are passionate about music must be perplexed by the inconsistencies within amplified and recorded music. These inconsistencies are mostly the result of technical ignorance of the reciprocal nature of the recording and playback chains.

The application of a microphone can only be understood in terms of how the result is heard by the listener through loudspeakers in a mostly chaotic, unknown listening environment. This can be a distorted PA system at a live concert in a large concrete echo box of a sports stadium, a small low fidelity home cinema system in an excessively reverberant lounge room, or an audiophile stereo system in a correctly acoustically damped room. Last but not least is the musical discernment, or lack of it, attached to a pair of ears.

The above vector analogy shows the 3 factors in each of the recording and playback chains that must be taken into consideration. The microphone is at the beginning of the recording chain, and the listener is at the end of the playback chain. The 2 triangles are therefore reciprocals of each other. The imaginary ideal is for both triangles to line up, which is impossible to achieve.

We must accept that the recording and playback mediums are entirely different. The objective is not to get caught up in believing that the listener can experience being at the performance as originally recorded, but for the listener to experience the performance as a reflection, as though it is alive. Making a recorded performance appear alive requires understanding the content of both triangles.

"A l i v e"

First we must understand what is meant by alive as separate from real. A recording has the capacity to contain greater detail with all instruments being heard at close range, which is impossible to hear at a concert when seated in the back rows. Listening to an opera in the back stalls may be real but it can sound completely dead.

Recording music has the capacity to sound more alive than actually being at the real concert. However there are some conditions that must be set. Instruments and voices can be animated with extended detail that is not available in the natural world. It is also not possible for any of us to listen to a world class opera singer within a meter or two. But we are able to listen to a microphone placed within a very close distance and hear the expression and detail that would not be heard at a concert sitting at a distance, with a lot of people coughing and rustling around us.

Reverberation-ion-ion-ion-ion-ion-ion

Because our ears are 180deg apart enabling us to hear sounds almost perfectly from every direction, any reverberation bouncing from walls and ceiling from different directions destroys localisation and 3D depth of field of the music. Therefore forward stereo 3D from speakers can only be heard in a non-reverberant environment. The visual equivalent of acoustic reverberation is a room full of distorted mirrors making it impossible to judge direction or position of anything seen.

After the first moment of direct sound or transients from the speakers, localisation information is ignored for approx 35ms. With excessive reverberation that extends beyond 35ms it will not be possible to detect direction or depth of field within the music. Also, stereo imaging is rarely attempted in live productions because of the excessive reverberation of most venues.

Compression

Over-compression of recorded music is similar to reverberation remaining at a constant level. Over-compressed music has little to zero spatial information. Repeat: left-right localisation and depth of field from recorded music are dependent on minimal compression and listening in a non-reverberant environment.
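One way to see what heavy compression does to transients is to compare the crest factor (peak level relative to RMS level) of a signal before and after processing. The sketch below is an illustration under stated assumptions, not a model of any particular studio compressor: it uses a simple static compressor with no attack or release time, and the threshold, ratio, test signal and function names are all hypothetical.

```python
import math

SAMPLE_RATE = 48_000  # Hz, assumed


def compress(samples, threshold=0.25, ratio=8.0):
    """Static compressor: level above the threshold is divided by the ratio."""
    out = []
    for x in samples:
        mag = abs(x)
        if mag > threshold:
            mag = threshold + (mag - threshold) / ratio
        out.append(math.copysign(mag, x))
    return out


def crest_factor_db(samples):
    """Peak-to-RMS ratio in dB; high values indicate strong transients."""
    peak = max(abs(x) for x in samples)
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return 20.0 * math.log10(peak / rms)


def test_signal():
    """0.1 s of quiet 440 Hz tone with one full-scale click (a stand-in transient)."""
    tone = [0.1 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(4800)]
    tone[1000] = 1.0
    return tone


if __name__ == "__main__":
    dry = test_signal()
    squashed = compress(dry)
    print(f"crest factor before: {crest_factor_db(dry):.1f} dB")
    print(f"crest factor after:  {crest_factor_db(squashed):.1f} dB")
```

The quiet tone passes through unchanged while the click is pulled down toward it, so the crest factor drops. Make-up gain applied afterwards raises the overall level but does not restore the transient.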